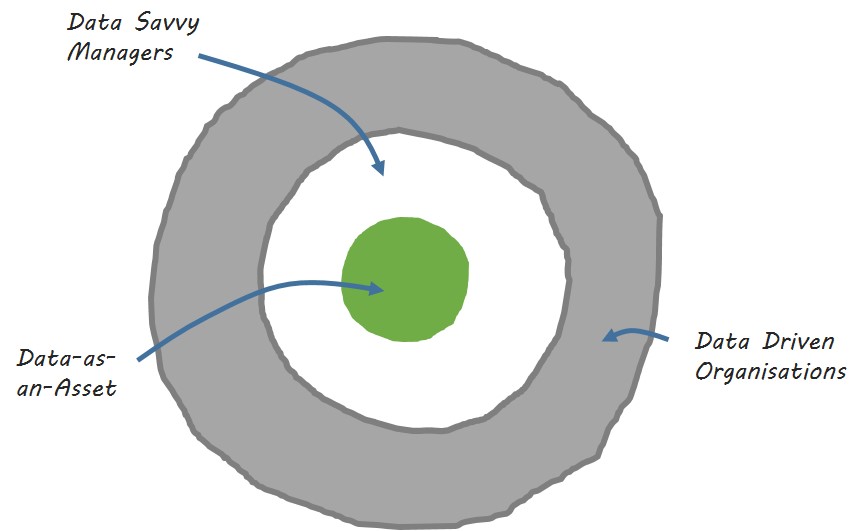

One estimate holds that for every data scientist, today’s economy needs ten data-savvy managers. The reasoning is that almost every manager needs to build their own data capabilities to stay relevant and effective in a world where more and more of our work is digitised. But what does it mean to be data savvy? What does it entail? And, more importantly, how can one become more data savvy? To help answer these questions, a few simple tasks are outlined below, covering each of the steps of the Data Value Map. Completing them may not turn you into a Chief Information Officer (CIO), but they provide excellent ‘bang for buck’ in setting you on the road to becoming more data savvy.

Value – leveraging organisational data assets: There is no doubt that there is a lot of talk about big data and the value to be gained from big data investments. I’m not disputing these claims, but I do feel there is more than enough opportunity to derive value from small data before you start to think big. It puzzles me why companies rush headlong into big data investments to build predictive analytics capabilities when they struggle to answer basic business questions such as: how many customers do we have? To show how much potential value lies in small data, Dr. Tom Redman offers a simple rule of thumb: a task done with bad data costs ten times as much as the same task done with good-quality data. In my experience of applying the rule many times, the factor of ten is actually quite conservative. The truth is that billions are being lost as a direct result of using bad-quality data (e.g. incorrect customer names or addresses), but because organisations deal with it so much, and so often, they have become blind to its cost. So, the first piece of advice for becoming data savvy is to look after the small data and the big data will look after itself; in other words, pick the low-hanging fruit of small data before you reach for big data investments.
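To make Redman’s rule of thumb concrete, here is a small worked sketch in Python. It assumes, as the rule does, that a task performed on bad data costs exactly ten times a task performed on good data; the function name `total_cost` and the task counts are hypothetical, chosen only for illustration.

```python
# A minimal sketch of Redman's rule of thumb as simple arithmetic.
# Assumption: a task on bad data costs exactly RULE_OF_TEN times a task on good data.
RULE_OF_TEN = 10


def total_cost(good_tasks: int, bad_tasks: int, unit_cost: float = 1.0) -> float:
    """Total cost of a batch of tasks, where bad-data tasks cost ten times as much."""
    return (good_tasks + RULE_OF_TEN * bad_tasks) * unit_cost


# Hypothetical example: 100 tasks, 20 of which are done with bad data.
baseline = total_cost(good_tasks=100, bad_tasks=0)   # every task on good data
actual = total_cost(good_tasks=80, bad_tasks=20)     # 20% of tasks on bad data
hidden_cost = actual - baseline

print(f"baseline={baseline}, actual={actual}, hidden cost={hidden_cost}")
```

Under these assumptions, bad data in just one task in five nearly triples the total cost (280 units against a baseline of 100). That extra 180 units is the kind of cost that goes unnoticed when it is absorbed as routine rework.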

Delivery – supplying analytical results in a suitable format: The choice of formats and visualisations for data is growing by the day and is often a key differentiator between data analytics packages, which can overlay data on maps, plot it on timelines, or aggregate it into heat-maps. While these features provide excellent flexibility in tailoring delivery to the needs of data consumers, even the simple act of adding colour to your data can have a significant impact. Experimenting with colour will also give you an insight into how data is perceived through different eyes. For instance, red is commonly associated with both ‘stop’ and ‘danger’, and these can mean very different things in different contexts. If you use red to signal ‘stop’ but your users read it as ‘danger’, they may do the opposite of what you expect, taking more action in an effort to move out of danger rather than halting.

Analysis – processing analytics on subsets of data: Data scientist is one of the highest-paid roles at the moment, and rightly so. Becoming a data scientist involves a great deal of programming and statistical training, which is tough even for people with a strong affinity for maths. Once qualified, the work itself can be daunting, as data scientists have to wade through huge datasets that may need cleaning before any analysis can begin. But being an expert in data analytics doesn’t necessarily mean knowing how the business works. To bridge this gap between business and analytics expertise, and to make full use of data scientists, managers need to start framing business questions in data science or statistical terms. This provides a common language through which data scientists can apply their knowledge with strong business impact. To do this, business managers need to be familiar with, but not expert in, statistical techniques. That may still sound daunting, but it becomes much easier if you get your hands on one of the many statistics books aimed at business people.
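As an illustration of framing a business question in statistical terms, suppose a manager asks “Did the new offer make more customers buy?”. One common way to restate this is as a two-proportion z-test: is the difference in conversion rates between customers who saw the old offer and those who saw the new one larger than chance alone would explain? The sketch below uses only Python’s standard library; the function name and the customer counts are hypothetical, and a real analysis would also check the test’s assumptions (e.g. reasonably large, independent samples).

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int, successes_b: int, n_b: int):
    """Compare two conversion rates; returns (z statistic, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: 200 of 5,000 customers bought with the old offer,
# 260 of 5,000 with the new one.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (conventionally below 0.05) suggests the uplift is unlikely to be due to chance alone. The value for a manager lies less in the arithmetic than in knowing to phrase the question this way, so that a data scientist can pick it up directly.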

Integration – combining datasets from numerous sources: You would expect almost everybody in business to be able to define what a product or a customer is. In reality, even basic definitions can prove very difficult. To see why, try the simple exercise of defining what a sandwich is. Once you have settled on a definition, see how it categorises a club sandwich, a wrap, a burger, a breakfast roll, or a taco, and you will see how quickly basic definitions become tricky. In one of his recent talks, Prof. Tom Davenport highlighted how the task becomes even harder when people are passionate about the subject. In one case he came across, the employees of an airport had over 30 definitions of what an airport is. For pilots it was a place where you land planes, whereas air traffic controllers believed it included the airspace and flight paths. Reaching consensus on these definitions, be they customers, sandwiches, or airports, is an essential data integration step, and one you can begin by asking yourself how you define your key business concepts. Once these are defined, you are also in good shape to start building your data model.
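One way to make a definition testable is to write it down as an explicit rule and run the awkward cases through it. The sketch below encodes one hypothetical definition of a sandwich (a filling between two or more separate pieces of sliced bread or a roll); the `FoodItem` fields and the rule itself are illustrative assumptions, not a recommended definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FoodItem:
    name: str
    bread_pieces: int   # number of separate pieces of bread
    bread_type: str     # e.g. "sliced", "roll", "bun", "tortilla"
    has_filling: bool


# Hypothetical agreed definition: a filling between two or more
# separate pieces of sliced bread or a roll.
SANDWICH_BREADS = {"sliced", "roll"}


def is_sandwich(item: FoodItem) -> bool:
    return (
        item.has_filling
        and item.bread_pieces >= 2
        and item.bread_type in SANDWICH_BREADS
    )


items = [
    FoodItem("club sandwich", 3, "sliced", True),
    FoodItem("wrap", 1, "tortilla", True),
    FoodItem("burger", 2, "bun", True),
    FoodItem("breakfast roll", 2, "roll", True),
    FoodItem("taco", 1, "tortilla", True),
]

for item in items:
    print(f"{item.name}: {is_sandwich(item)}")
```

Under this rule a burger is not a sandwich, which some readers will dispute. That disagreement is exactly what needs to surface, and be resolved, before ‘customer’ or ‘product’ records from different systems are combined.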

Acquisition – gathering data from business activity: With the right data sources, organisations are able to build strong insights into their business and make decisions with confidence. However, many organisations rely too heavily on spreadsheets throughout every part of the Data Value Map. Spreadsheets are an excellent format for delivering data to consumers, but in other places, such as the acquisition phase, the negative impact of using them as a source can be significant. Being aware of their dangers is an essential first step towards reducing, if not eliminating, reliance on spreadsheets in every phase other than delivery. The problem is that spreadsheets are often a source of very poor-quality data: inaccuracies and duplicated, scattered copies are common, because they are open to manual entry and their use and distribution are hard to control.
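A quick way to see the spreadsheet problem for yourself is to look for the same record entered more than once with small variations. The sketch below uses Python’s built-in `csv` module to group rows by normalised name and email; the sample data, the choice of key fields, and the normalisation rules (lower-casing and collapsing whitespace) are all assumptions for illustration, and real record matching usually needs more care.

```python
import csv
import io
from collections import defaultdict


def normalise(value: str) -> str:
    # Assumed rule: lower-case and collapse whitespace so "ann  smith " matches "Ann Smith".
    return " ".join(value.lower().split())


def find_duplicates(rows, key_fields):
    """Group rows by their normalised key fields; keep only keys seen more than once."""
    groups = defaultdict(list)
    for row in rows:
        key = tuple(normalise(row[field]) for field in key_fields)
        groups[key].append(row)
    return {key: matches for key, matches in groups.items() if len(matches) > 1}


# Hypothetical export from a manually maintained customer spreadsheet.
SAMPLE = """name,email,city
Ann Smith,ann@example.com,Dublin
ann  smith ,ANN@example.com,Dublin
Bob Jones,bob@example.com,Cork
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
dupes = find_duplicates(rows, ["name", "email"])
for key, matches in dupes.items():
    print(key, len(matches))
```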

Governance – promoting behaviours to ensure data quality: Data governance is not the most exciting of tasks to undertake (which is probably why so many companies don’t do it), but it is vitally important to a strong data capability. To get into the right mindset for governance, always put people before technology when discussing data initiatives. Technology is often seen as the complicated part of the solution, but for the most part technology does what it’s supposed to do. People, on the other hand, are a totally different proposition. The behaviours of people are essentially the biggest challenge to becoming more data driven: understanding what people really want, getting buy-in from users, and facilitating changes in roles and processes are just a few examples. Furthermore, putting people first reduces the risk of becoming enamoured with technology and its promise to solve all your problems, which again is a behavioural or perception issue and not the fault of the technology.

Last word: Keep in mind that involving others in these tasks is vital to their success. With a bit of effort, steps such as these have proven to be excellent pointers for developing a data-savvy mindset, and the start of a foundation from which organisations begin to appreciate their data as an asset rather than a cost.